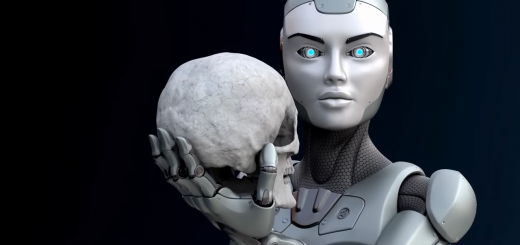

Artificial Intelligence: Can We Trust Our Creations?

Technology has advanced rapidly in recent years and has changed how we live. From voice assistants on our smartphones to self-driving cars, artificial intelligence (AI) is becoming part of everyday life, and its impact on society is growing.

However, with this progress comes a growing concern about the ethical implications of AI. As AI continues to evolve, we must ask ourselves: can we trust our creations?

Defining Artificial Intelligence

At its core, AI is the ability of machines to perform tasks that would typically require human intelligence, such as learning, reasoning, and problem-solving. Many AI systems, particularly those built with machine learning, analyze large amounts of data and make decisions or predictions based on it. Some can also adapt to changing conditions and improve as they receive more data.

However, AI also carries risks. As these systems become more capable and are given more responsibility, some of their decisions may harm people. This raises important questions about the ethics of AI and about how these systems can be developed and used responsibly.

The Importance of AI Ethics

Ethical considerations become more important as we build increasingly capable machines. To ensure that AI benefits society, we need ethical guidelines and standards for how it is developed and used, covering concerns such as privacy, security, and transparency.

Well-designed ethical guidelines can help ensure that AI systems reflect human values. In practice, this means developing and using AI to improve the quality of life for all people, not just a select few.

The Dangers of Unethical AI

One of the biggest risks of AI is that it may become difficult to control. Without proper oversight, AI systems could make decisions that harm people, whether by being biased or discriminatory or, in the worst cases, by causing physical injury or death.

Another danger is job displacement. As machines become capable of performing tasks once reserved for humans, many jobs may become obsolete, which could lead to widespread unemployment and economic instability.

The History of AI Ethics

The ethical implications of AI have been discussed for decades, and some writers trace the underlying questions much further back, to ancient Greek myths about artificial beings such as the bronze automaton Talos.

Fast forward to the 20th century, when the rise of computer science and AI research heightened concerns about the potential dangers of AI. These concerns eventually gave rise to AI ethics as a field of study, which seeks to understand the moral implications of AI and to establish guidelines for its use.

The Ethical Implications of AI

As AI continues to advance, several ethical implications must be considered. As noted above, one of the most pressing is job displacement: as machines take over tasks once performed by people, many jobs risk becoming obsolete.

Privacy is another significant concern. AI systems often rely on large amounts of personal data, and that data could be misused, whether through the theft of personal information or through its use to manipulate individuals or groups.

Bias and discrimination are also major concerns. AI systems learn from data, and if that data reflects existing biases, the systems can perpetuate or even amplify them unless they are carefully designed and tested. This could have serious consequences for individuals and groups who are already marginalized.

The military is another area where AI ethics is particularly important. With the rise of autonomous weapons and drones, there is a risk that AI systems could make life-or-death decisions without meaningful human oversight.

AI and Society

The responsible development and use of AI is a complex issue involving many stakeholders, including governments, corporations, and individuals.

Governments play a particularly important role. They are in a position to establish guidelines and regulations for the development and use of AI systems, including rules on privacy, security, and transparency.

Corporations also have a responsibility to use AI ethically. This means designing AI systems that prioritize human values and ensuring that these systems are not used for malicious purposes.

Individuals also have a role to play in the ethical use of AI. This includes advocating for ethical guidelines and standards, and using AI systems in a responsible and ethical manner.

Current AI Ethics Frameworks

Several frameworks and guidelines have been published in recent years to address the ethical implications of AI, including:

- The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

- The Future of Life Institute’s Asilomar AI Principles

- The European Union’s Ethics Guidelines for Trustworthy AI

These frameworks aim to set clear principles and standards for the ethical development and use of AI. They are largely voluntary, but following them can help ensure that AI is developed and used responsibly.

Conclusion

The ethical implications of AI are complex and multifaceted. To ensure that AI benefits society, we need ethical guidelines and standards for its development and use that address privacy, security, and transparency and keep human values at the center of AI design. By working together to build responsible AI systems, we can help ensure a brighter future for all of humanity.